A Chronological Look At AI: A Decade-by-Decade Evolution

Here we cut through the noise to deliver a clear, compelling history of a transformative technology: artificial intelligence and machine learning. We follow the field from its theoretical roots in the 1940s to today’s generative AI boom, from the McCulloch-Pitts neuron model to the state of deep learning in 2025. The focus is on discoveries that proved foundational, either because later ideas depended on them or because they led directly to further breakthroughs. From a bird’s-eye view, and as simply as we can, we trace the key milestones, the brilliant minds, and the surprising turns that shaped the field.

Discover why moments like the AlexNet breakthrough and the rise of Nvidia’s GPUs were more than incremental steps: they were the sparks that ignited the AI revolution. We show how tech giants like Google, Tesla, and Meta have driven innovation, contributed to open source, and reshaped industries with their advancements. Whether you’re an AI enthusiast or just curious about how we got here, this post provides the essential context you need to understand the forces at play. It’s a journey through time, a story of human ingenuity, and a look at the future of intelligence itself.

Part I: The Foundational Decades (1940s-1970s)

The intellectual seeds of artificial intelligence were sown long before the first computer could even hum. The post-war era, fueled by a blend of mathematics, neuroscience, and engineering, laid the theoretical bedrock. It was a time of abstract concepts and philosophical debate, asking a question that still echoes today: Can machines think? 🤔

1943: The Neural Dawn

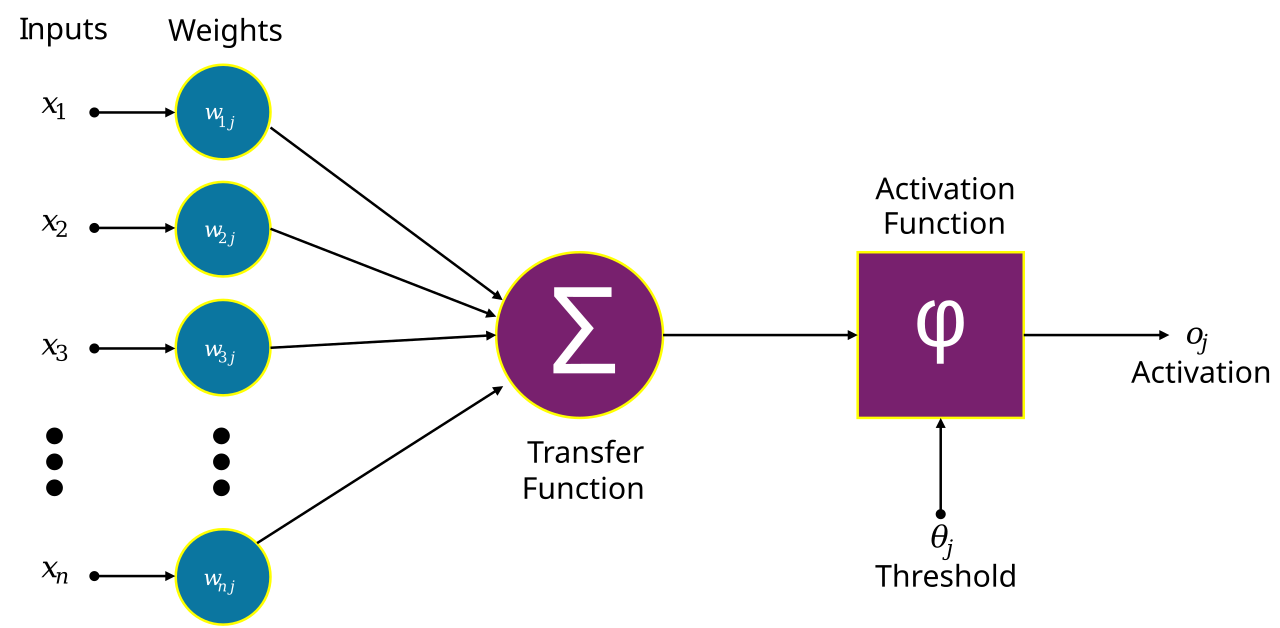

The 1940s were marked by groundbreaking theoretical work. In 1943, Warren McCulloch and Walter Pitts published a seminal paper that modeled artificial neurons as logical circuits. This was a radical idea that provided the first mathematical model of a neural network. This period also saw Norbert Wiener coin the term “cybernetics” to describe feedback and control systems, which greatly influenced early AI’s focus on self-regulating machines.

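- McCulloch-Pitts Neuron Model: The first mathematical model of neural activity. Read the original paper. Taking inspiration from how biological neurons work, the paper modeled a neuron as a simple threshold unit: it added up its binary (on/off) inputs and “fired” only if the total reached a fixed threshold, while any active inhibitory input prevented it from firing. This all-or-nothing step function is the ancestor of the non-linear activation functions used in neural networks today.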

- Birth of Cybernetics: Norbert Wiener’s work on feedback systems. Explore Wiener’s legacy.
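To make the threshold idea concrete, here is a minimal Python sketch of a McCulloch-Pitts-style unit. It assumes the 1943 conventions of binary inputs, a fixed threshold, and inhibitory inputs that veto firing; the step (threshold) rule plays the role of the activation function. The function names and gate wirings are illustrative choices, not taken from the paper.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """McCulloch-Pitts-style threshold unit (illustrative sketch).

    excitatory, inhibitory: sequences of 0/1 inputs.
    Returns 1 ("fires") only if no inhibitory input is active and the
    number of active excitatory inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0  # absolute inhibition: one active inhibitory input vetoes firing
    return 1 if sum(excitatory) >= threshold else 0


# Different thresholds and wirings yield different logic gates.
def and_gate(a, b):
    return mp_neuron([a, b], [], threshold=2)


def or_gate(a, b):
    return mp_neuron([a, b], [], threshold=1)


def not_gate(a):
    # No excitatory inputs and a threshold of 0: fires unless inhibited.
    return mp_neuron([], [a], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(f"a={a} b={b}  AND={and_gate(a, b)}  OR={or_gate(a, b)}")
print(f"NOT 0 = {not_gate(0)}, NOT 1 = {not_gate(1)}")
```

Nothing here is learned: the behavior is fixed entirely by the hand-chosen threshold, which is exactly the gap that later learning rules set out to fill.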

1948-1950: Turing’s Test and Intelligent Machinery

The 1950s turned theory into practice. In his 1950 paper, Alan Turing, the computing pioneer, proposed the “Imitation Game” (now known as the Turing Test), posing the ultimate question: Can machines think? This work bridged theory and application, setting the stage for decision-making algorithms.

- Computing Machinery and Intelligence: Turing’s classic paper on machine intelligence. Read the full paper.

- First Neural Net: In 1951, Marvin Minsky and Dean Edmonds built SNARC, widely regarded as the first neural network machine, using vacuum tubes. See SNARC details.

- First Machine Learning Program: In 1952, Arthur Samuel wrote a checkers program that became one of the first to demonstrate machine learning, improving its play through experience. Samuel went on to popularize the term “machine learning” in 1959. Learn about Samuel’s work.

1956: The Birth of AI

AI’s official birth certificate was signed in the summer of 1956 at a historic workshop at Dartmouth College. Organized by John McCarthy, Marvin Minsky, and others, this event formally established “artificial intelligence” as a new discipline. It was here that Allen Newell and Herbert Simon unveiled the Logic Theorist, a program that could autonomously prove mathematical theorems.

- Dartmouth Workshop: The official coining of “artificial intelligence.” Read the proposal text.

- Logic Theorist Debut: The first program to solve math theorems. Explore the original demo.

1958-1969: Expert Systems and Backpropagation Roots

The years that followed saw a clash of paradigms. Frank Rosenblatt’s Perceptron (1958) brought the idea of learning from data to the forefront, while John McCarthy’s Lisp became the dominant programming language for symbolic AI. The focus shifted towards expert systems: programs that encoded human knowledge as rules to solve complex problems.

- Perceptron Invention: Rosenblatt’s single-layer neural network. Read the paper.

- ELIZA Chatbot: Weizenbaum’s 1966 program mimicked conversation. Learn about the code.

- Shakey the Robot: SRI’s 1969 mobile robot that integrated vision and planning. See Shakey in action.

In 1969, however, Minsky and Papert’s book Perceptrons highlighted the limitations of single-layer neural networks (most famously, that they cannot represent the XOR function). The critique contributed to the first of several “AI winters”: periods of reduced funding and interest in the field. It helps explain why neural network research stalled for more than a decade, only to re-emerge stronger later on.
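The limitation Minsky and Papert pointed to is easy to reproduce. Below is a minimal sketch of the classic perceptron learning rule (step activation, error-driven weight updates) in plain Python; the learning rate, epoch counts, and zero initialization are arbitrary choices for illustration. It finds a separating line for AND within a few passes, but it can never classify XOR perfectly, because no single straight line separates XOR’s positive and negative cases.

```python
def step(z):
    """Threshold (step) activation used by the classic perceptron."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule for two binary inputs.

    samples: list of ((x1, x2), target) pairs with targets in {0, 1}.
    Returns (weights, bias, converged); converged is True once a full pass
    over the data produces no mistakes.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in samples:
            error = target - step(w[0] * x1 + w[1] * x2 + b)
            if error != 0:
                # Nudge the weights and bias toward the correct answer.
                mistakes += 1
                w[0] += lr * error * x1
                w[1] += lr * error * x2
                b += lr * error
        if mistakes == 0:
            return w, b, True
    return w, b, False


def predict(w, b, x1, x2):
    return step(w[0] * x1 + w[1] * x2 + b)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b, ok = train_perceptron(AND_DATA)
print("AND learned:", ok)  # True
w, b, ok = train_perceptron(XOR_DATA, epochs=1000)
print("XOR learned:", ok)  # False, no matter how many epochs
```

Stacking a hidden layer removes this limitation, but training such networks needed a way to assign credit to the hidden weights, which is the problem backpropagation later solved.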

Part II: The Quiet Revolution (1980s-2010s)

After the initial boom and bust, AI’s journey became a quieter, more technical pursuit, laying the essential groundwork for today’s explosive growth. This era was defined by two key undercurrents: the slow, steady progress in neural networks and the emergence of parallel computing hardware.

1980s: The Expert Systems Boom and AI Winter

The decade saw a commercial frenzy for expert systems, but overpromising led to the second AI winter by the late 1980s. However, behind the scenes, a few key developments were bubbling under the surface. In 1986, a seminal paper by Rumelhart, Hinton, and Williams repopularized backpropagation, a critical algorithm for efficiently training multi-layered neural networks.

- Backpropagation Popularized: The key algorithm for deep learning. Read the 1986 paper.

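- Bayesian Networks: Judea Pearl’s probabilistic reasoning, which allowed AI systems to handle uncertainty. Pearl introduced the term “Bayesian network” in 1985 and laid out the framework in his 1988 book Probabilistic Reasoning in Intelligent Systems. Read the book.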
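The core of backpropagation is the chain rule applied layer by layer, from the output back to the input. The sketch below uses a hypothetical two-input, two-hidden-unit network with sigmoid activations and a squared-error loss (all sizes and weight values are chosen only for illustration). It computes the gradients that way and checks them against a brute-force finite-difference estimate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output."""
    W1, b1, W2, b2 = params["W1"], params["b1"], params["W2"], params["b2"]
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y = sigmoid(W2[0] * h[0] + W2[1] * h[1] + b2)
    return h, y


def loss(params, x, target):
    """Squared-error loss for a single training example."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Exact gradients via the chain rule, working from the output backwards."""
    h, y = forward(params, x)
    # Output unit: dL/dz = (y - t) * sigmoid'(z), and sigmoid'(z) = y * (1 - y).
    delta_out = (y - target) * y * (1.0 - y)
    # Hidden units: send the output error back through W2 and each sigmoid.
    delta_h = [delta_out * params["W2"][j] * h[j] * (1.0 - h[j]) for j in range(2)]
    return {
        "W2": [delta_out * h[0], delta_out * h[1]],
        "b2": delta_out,
        "W1": [[delta_h[j] * x[0], delta_h[j] * x[1]] for j in range(2)],
        "b1": delta_h,
    }


def numeric_grad_W1(params, x, target, j, k, eps=1e-6):
    """Central finite-difference estimate of dL/dW1[j][k], used as a check."""
    original = params["W1"][j][k]
    params["W1"][j][k] = original + eps
    plus = loss(params, x, target)
    params["W1"][j][k] = original - eps
    minus = loss(params, x, target)
    params["W1"][j][k] = original  # restore the weight
    return (plus - minus) / (2 * eps)


# Arbitrary example weights and a single training example.
params = {"W1": [[0.5, -0.4], [0.3, 0.8]], "b1": [0.1, -0.2], "W2": [1.2, -0.7], "b2": 0.05}
x, target = (1.0, 0.5), 1.0

grads = backprop(params, x, target)
for j in range(2):
    for k in range(2):
        print(j, k, grads["W1"][j][k], numeric_grad_W1(params, x, target, j, k))
```

Backpropagation obtains every gradient from one forward and one backward pass, whereas the finite-difference check needs two extra forward passes per weight. That efficiency gap is what made training multi-layer networks practical.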

1990s: Hardware and Strategic Victories

The next decade brought two major triumphs that hinted at AI’s future power. In 1997, IBM’s Deep Blue became the first computer to defeat a reigning world chess champion, Garry Kasparov, in a match. It was a monumental public display of AI’s strategic prowess. That same year, Sepp Hochreiter and Jürgen Schmidhuber published the Long Short-Term Memory (LSTM) network, which gave neural networks the ability to remember information over long sequences, a crucial innovation for everything from speech recognition to language translation.

- Deep Blue Beats Kasparov: The first computer to defeat a reigning world chess champion in a match. Match recap.

- LSTM Invention: A key breakthrough for sequence modeling in neural networks. Read the 1997 paper.

- Nvidia’s Founding: In 1993, Jensen Huang, Chris Malachowsky, and Curtis Priem founded Nvidia, a company whose GPUs would eventually become the hardware engine for much of the AI industry. Nvidia company history.
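The gating idea behind LSTMs can be sketched in a few lines. The example below is a single-unit LSTM step in plain Python, written in the now-standard form with a forget gate. Note that the forget gate was a later addition (Gers, Schmidhuber, and Cummins, around 2000) rather than part of the 1997 design. The parameter layout and values are assumptions for illustration. The key line is the additive cell update `c = f * c_prev + i * g`: when the forget gate stays near 1 and the input gate near 0, the cell state is carried forward almost unchanged across many steps.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell (standard variant with a forget gate).

    p maps each gate name to a (w_x, w_h, bias) triple (hypothetical layout).
    Returns the new hidden state h and cell state c.
    """
    def gate(name, activation):
        w_x, w_h, bias = p[name]
        return activation(w_x * x + w_h * h_prev + bias)

    i = gate("input", sigmoid)        # how much new information to write
    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    o = gate("output", sigmoid)       # how much of the cell state to expose
    g = gate("candidate", math.tanh)  # the candidate value to write
    c = f * c_prev + i * g            # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Illustrative parameters: forget gate biased open, input gate biased shut.
params = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with a "remembered" value of 1.0 in the cell
for x in [0.5, -2.0, 3.0] * 20:  # 60 steps of unrelated input
    h, c = lstm_step(x, h, c, params)
print(round(c, 3))
```

After 60 steps of distracting input, the cell still holds a value close to 1.0. A plain recurrent unit that multiplies its state by a weight at every step has no such protected path, which is why its memory tends to fade (or blow up) over long sequences.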

2006: The Deep Learning Revival

The mid-2000s marked the dawn of the true deep learning revolution. This era was defined by three key ingredients: big data, massive computational power, and renewed focus on neural network research. The launch of Amazon Web Services (AWS) in 2006 democratized access to scalable computing, while Fei-Fei Li’s ImageNet project provided the massive, labeled dataset needed to train robust computer vision models.

- Hinton’s Deep Belief Nets: A breakthrough that showed deep nets were viable. Read the 2006 paper.

- AWS Public Launch: Amazon’s cloud service enabled scalable AI training. AWS history.

- ImageNet Begins: Fei-Fei Li’s dataset that would fuel the vision AI boom. Project site.

- Google Translate Launch: Google’s statistical machine translation service, launched in 2006, an early machine-learning tool for breaking down language barriers. Google Blog.

Part III: The Deep Learning Renaissance (2012-2021)

2012: The AlexNet Breakthrough 💥

The year 2012 was a pivotal turning point in the history of artificial intelligence. At the annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a University of Toronto team of Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton unleashed AlexNet, a deep convolutional neural network (CNN) that shattered all previous records. It achieved a top-5 error rate of just 15.3%, far ahead of the runner-up’s 26.2%.

This wasn’t just another incremental win; it was a paradigm shift. AlexNet’s success, powered by Nvidia GPUs, demonstrated that deep learning wasn’t just a theoretical curiosity but a practical, scalable approach to solving real-world problems. Its victory unleashed a frenzy of research and investment, making CNNs the de facto standard for computer vision. Without this moment, the generative AI boom of the 2020s might have been delayed by years. That same year, Google launched its Knowledge Graph, a large knowledge base of entities and the relationships between them that helped Search understand what a query was about rather than just matching keywords.

- AlexNet Victory: A 15.3% top-5 error on ImageNet with a GPU-trained CNN, nearly 11 percentage points ahead of the runner-up. Read the 2012 paper.

- Google Knowledge Graph: Semantic AI boosts search relevance. Google Blog.
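The “top-5 error” behind AlexNet’s result counts a prediction as wrong only if the correct label is missing from the model’s five highest-scoring guesses. Here is a minimal sketch of the metric, using made-up class scores rather than real ImageNet outputs, followed by the arithmetic behind the 15.3% versus 26.2% comparison.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is not among the k highest scores.

    scores: list of per-example score lists (one score per class).
    labels: list of true class indices.
    """
    misses = 0
    for example_scores, label in zip(scores, labels):
        # Indices of the k highest-scoring classes for this example.
        top_k = sorted(range(len(example_scores)),
                       key=lambda cls: example_scores[cls], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy example: 3 images, 7 classes, made-up scores.
scores = [
    [0.10, 0.30, 0.05, 0.20, 0.15, 0.12, 0.08],  # true class 1 ranks 1st
    [0.40, 0.02, 0.01, 0.25, 0.15, 0.10, 0.07],  # true class 2 ranks last
    [0.05, 0.10, 0.20, 0.30, 0.25, 0.06, 0.04],  # true class 0 ranks 6th
]
labels = [1, 2, 0]
print(top_k_error(scores, labels, k=5))  # 2 of 3 examples miss the top 5

# The 2012 gap, in absolute and relative terms.
alexnet, runner_up = 15.3, 26.2
gap_points = runner_up - alexnet               # 10.9 percentage points
relative_reduction = gap_points / runner_up    # roughly 0.416, i.e. ~42% fewer errors
print(round(gap_points, 1), round(relative_reduction, 3))
```

Put differently, AlexNet made roughly 42% fewer top-5 mistakes than the next-best entry, which is why the result read as a break from the past rather than another small step.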

2014-2016: Generative Models and Go Mastery

- 2014: The invention of Generative Adversarial Networks (GANs) by Ian Goodfellow and his colleagues opened the door to a new era of generative AI, allowing two neural networks to “compete” to create more realistic images and data. In a major strategic move, Google acquired DeepMind, bringing its deep learning and reinforcement learning expertise in-house. That same year, Tesla began building Autopilot hardware into the Model S; the software followed as a public beta in 2015, marking AI’s entry into consumer vehicles.

- GAN Invention: Goodfellow’s adversarial training for images. Read the 2014 paper.

- Google Acquires DeepMind: Brings RL and deep learning firepower. Google AI Journey.

- Tesla Autopilot Launch: AI-assisted driving debuts in Model S. Forbes.
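The “competition” between the two networks has a precise form. In the original 2014 formulation, a generator G maps random noise z to samples, and a discriminator D outputs the probability that its input came from the real data rather than from G. The two play the following minimax game:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

D is trained to push V up, scoring real data near 1 and generated samples near 0, while G is trained to push V down by producing samples that D cannot tell apart from real data.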

- 2016: DeepMind’s AlphaGo stunned the world by defeating Lee Sedol, one of the strongest Go players of his era. Unlike Deep Blue, which relied largely on brute-force search, AlphaGo combined deep neural networks, trained with supervised and reinforcement learning, with tree search, producing a style of play that looked almost intuitive.

- AlphaGo vs. Lee Sedol: A 4-1 win via Monte Carlo Tree Search + DL. Match coverage.

- Tesla Autopilot 2.0: Neural nets for vision-based autonomy. Analytics Vidhya.

2017: Transformers and the NLP Revolution

- 2017: A seemingly innocuous paper from Google titled “Attention Is All You Need” introduced the Transformer architecture. By replacing traditional recurrent layers with a more parallelizable “attention mechanism,” it revolutionized how models process sequences of data. This was the key that unlocked the modern era of Large Language Models (LLMs). That same year, Meta (then Facebook) entered the fray by open-sourcing PyTorch, a flexible deep learning framework that quickly became a favorite among researchers. AWS also launched SageMaker, a cloud platform for building and training machine learning models at scale.

- Transformer Model: The paper that enabled scalable NLP. Read the 2017 paper.

- Meta PyTorch Release: Flexible DL framework for researchers. Meta AI.

- AWS SageMaker: Cloud ML service for large models. Launch announcement.
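The attention mechanism at the heart of the Transformer is compact. The sketch below implements scaled dot-product attention, softmax(QKᵀ/√d_k)V, as described in the 2017 paper, in plain Python. The tiny matrices are made-up values, and a real Transformer adds learned projections, multiple heads, and masking on top of this core. The loop over queries is written out only for readability; in matrix form every query is compared with every key at once, which is what lets the whole sequence be processed in parallel instead of step by step as in a recurrent network.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for lists of row vectors.

    Q: n_q x d_k queries, K: n_k x d_k keys, V: n_k x d_v values.
    Returns (outputs, weights): n_q x d_v outputs and n_q x n_k attention weights.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w[j] * V[j][col] for j in range(len(V))) for col in range(len(V[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Three tokens with 2-dimensional queries, keys, and values (made-up numbers).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

outputs, weights = scaled_dot_product_attention(Q, K, V)
for row in weights:
    print([round(val, 3) for val in row])
```

Each row of weights sums to 1, so every output is a blend of the value vectors, with the most weight on the tokens whose keys best match that query. The third query, [1, 1], attends most strongly to the third key.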

2018-2021: Scaling and Generative Creativity

- 2018: Google released BERT, a bidirectional transformer model that drastically improved language models’ ability to understand context. That same year, DeepMind made a leap in biological research with AlphaFold, which ranked first in the CASP13 protein structure prediction assessment; its successor, AlphaFold 2, reached accuracy competitive with experimental methods at CASP14 in 2020.

- BERT Pretraining: A groundbreaking NLP model. Read the 2018 paper.

- AlphaFold Protein Structures: DeepMind’s 3D predictions. CASP win.

- 2019: DeepMind’s AlphaStar became the first AI to defeat professional players in the real-time strategy game StarCraft II, while Tesla unveiled its Full Self-Driving computer, a custom chip built to run its driving neural networks inside the car.

- AlphaStar Grandmaster: Mastering the real-time strategy game StarCraft II. DeepMind blog post.

- Tesla FSD Computer: AI hardware for real-time driving decisions. Analytics Vidhya.

- 2020-2021: OpenAI’s GPT-3 (2020) showed strong few-shot learning, performing new tasks from just a few examples in its prompt, which some observers took as an early hint of more general intelligence. The generative AI wave truly began cresting in 2021 with OpenAI’s DALL-E, which blended text and vision to produce surreal images. That same year, Tesla announced Dojo, a supercomputer designed to train video-based AI on petabytes of driving data.

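- GPT-3 Release: “Language Models are Few-Shot Learners,” the paper introducing a 175-billion-parameter model that could tackle new tasks from a handful of examples. Read the paper.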

- DALL-E Debut: Text-to-image generation goes mainstream. OpenAI blog.

- Tesla Dojo Supercomputer: Custom AI training for autonomy. Analytics Vidhya.

Part IV: The Generative AI Explosion & The Age of Agents (2022-2025)

The AI landscape of the 2020s has been defined by two major themes: democratization and integration. AI is no longer a hidden engine running in the background; it’s a co-pilot, a creative partner, and an indispensable tool touching every aspect of our lives.

2022: AI Goes Viral 🚀

The year 2022 will be remembered as the year AI broke out of the lab and into the mainstream. The open-sourcing of Stable Diffusion democratized high-quality image generation, allowing anyone with a modest GPU to create stunning art from text. But it was the launch of ChatGPT in November that truly changed everything.

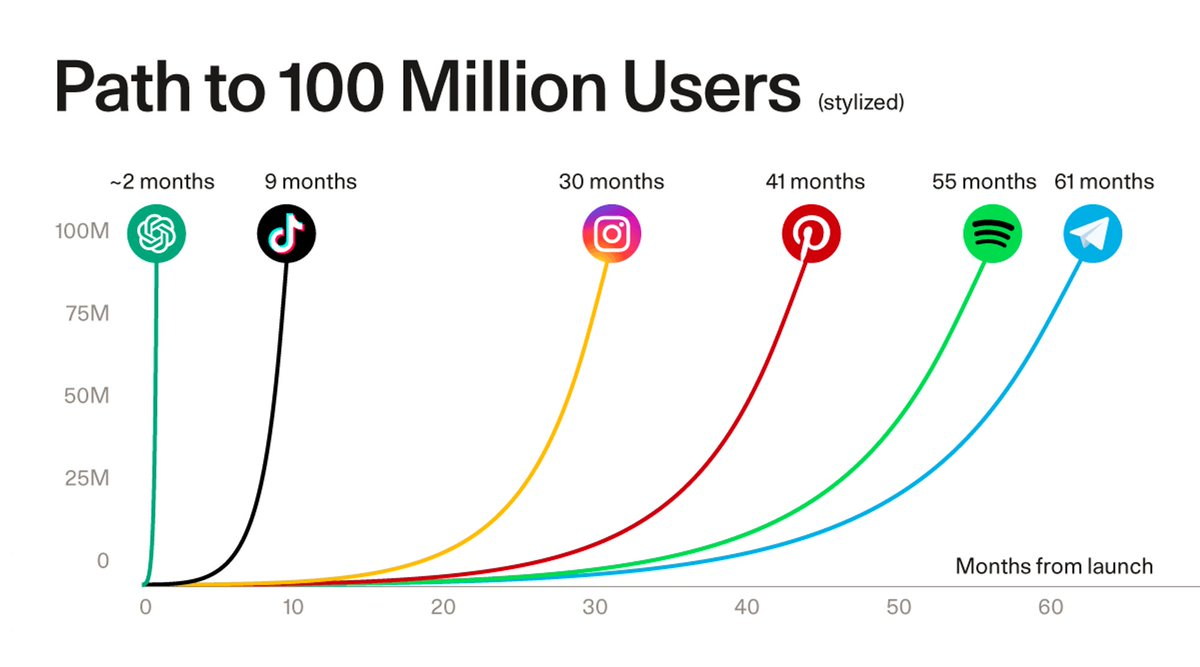

The graph above, shared by Twitter user Jesse Middleton, illustrates how quickly ChatGPT gained users at launch compared with other major consumer platforms such as Spotify and Instagram.

Built on GPT-3.5, a refined successor to GPT-3, and fine-tuned for conversation, ChatGPT’s intuitive interface made AI feel simple and approachable. It reportedly reached an estimated 100 million users in about two months, a record for a consumer app at the time, proving that there was a massive appetite for accessible AI. This moment thrust generative AI into the global spotlight, igniting a frenzied race among tech giants.

- Stable Diffusion Launch: A public release of a diffusion-based text-to-image model. Read the announcement.

- DALL-E 2 Release: Enhanced image synthesis with inpainting. OpenAI intro.

- ChatGPT Launch: The accessible LLM interface goes viral. See usage stats.

2023: The Race for Supremacy

The momentum from 2022 carried into a year of explosive innovation and fierce competition.

- Multimodal Models: OpenAI’s GPT-4 was released with the ability to process not just text but also images. This was a crucial step towards creating AI with a more holistic understanding of the world. New competitors emerged, with Google launching Gemini, a natively multimodal model, and Anthropic’s Claude focusing on safety and helpfulness.

- GPT-4 Technical Report: Multimodal capabilities with vision integration. OpenAI blog.

- Gemini 1.0 Release: Google’s multimodal model family, announced in December, with strong reported benchmark results. Google announcement.

- Claude 2 Launch: Anthropic’s update emphasizes safety and helpfulness. Anthropic blog.

- Open-Source Power: Meta’s decision to openly release the weights of its Llama 2 models catalyzed a vibrant ecosystem of community-driven AI research and development. Meta News.

- The Regulatory Reckoning: This rapid progress also sparked a global conversation about the risks and ethical implications of AI, leading to international summits and the first wave of regulatory proposals.

- AI Pause Letter: An open letter urging caution on giant AI experiments. Future of Life.

- Bletchley Declaration: 28 nations commit to safe AI. UK gov.

2024 & Beyond: The Age of Agents

Today, in 2025, the focus is shifting from mere “chatbots” to autonomous AI agents: systems that can carry out multi-step tasks and interact with software and the real world on their own. We’re seeing this in everything from Tesla’s Optimus humanoid robot to the proliferation of AI tools that can book flights, manage calendars, and write code.

- Apple Intelligence: On-device gen AI in iOS. Apple’s WWDC 2024.

- Figure 01 Robot: Humanoid feats in manipulation. Figure AI demo.

- Gemini 2.0: Google’s frontier multimodal AI. Google Blog.

- Tesla Optimus and Robotaxi: Tesla pushes toward robot deployment and autonomous mobility. Battery Tech Online.

- Meta Llama 3 and Meta AI: Meta’s open models and assistant launch. Meta AI.

The AI race isn’t just about who has the biggest model anymore; it’s about who can create the most efficient, capable, and seamlessly integrated AI. While costs plummet and open models close the performance gap, the most significant breakthroughs are no longer just in academia but in the real-world deployment of AI into products and services that we use every day.

Conclusion: An Unfolding Story

The journey of artificial intelligence is a testament to human curiosity and technological ingenuity. From the theoretical musings of Turing to the silicon-fueled agentic swarms of today, the field has undergone dramatic shifts and explosive growth. The milestones of the past—the Logic Theorist, Deep Blue, and especially AlexNet’s GPU-powered coup—weren’t just victories; they were the essential sparks that ignited the inferno of innovation we are witnessing today. As AI matures, it promises to reshape our reality in ways we are only beginning to comprehend.

How AI was used to write this post:

It is only fair that AI was involved in writing this post. Grok from xAI wrote the first draft, and Google’s Gemini acted as chief editor to refine the final version, including deciding where images and videos were placed. Meta AI was used to create some images, but they did not make it into the final draft because the chief editor generated the cover image for this post by itself. Here’s one imperfection: if you look closely at the cover image at the top of this post, you should notice some spelling mistakes that are not immediately evident.

Reader, this looks like a good place to let you go after telling you about all the great advances AI has made, albeit with a warning: AI models keep improving, but they are not yet perfect, as this very post illustrates.